by Katie Allen

Since 2022, the Registered Teacher Apprenticeship (RTA) has emerged as a new model of interest to many state and local education agencies seeking to increase the number of highly qualified, licensed teachers. RTA is a paid, on-the-job training model with mentoring that uses a competency-based framework in tandem with higher education preparation to develop the skills and knowledge a successful teacher needs.

In spring 2023, Kansas passed House Bill (HB) 2292, which promotes workforce development by enacting the Kansas Apprenticeship Act. With that legislation in place, the Kansas State Department of Education's Teacher Licensure Department set out to design and implement a pilot RTA program to increase the number of qualified, credentialed teachers in Kansas. The pilot cohort consists of fourteen apprentices and mentors from six school districts, and the state aims to scale the model to serve 500 apprentices starting in August 2024. The Region 12 Comprehensive Center (R12CC) is supporting the pilot RTA program through technical assistance and capacity building for evaluation.

Innovative initiatives such as RTA have great potential to increase both the number and the quality of teachers. It is therefore important both to understand how the program is implemented across multiple sites and to gauge the effectiveness of planned activities such as recruitment into the program, required instructional training, and mentoring. Robust evaluation provides accountability and serves as a means of continuous improvement.

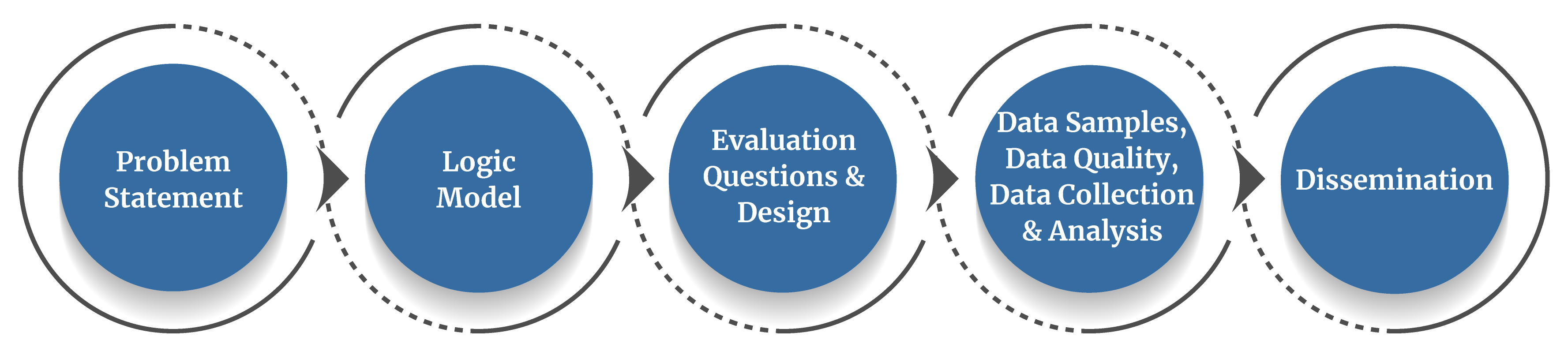

In this blog post, we discuss why program evaluation planning matters and highlight the value of using the REL Central Program Evaluation Toolkit[1] to build practitioners' capacity. The toolkit consists of eight modules, each with a video lesson that introduces the topic and resources that support an independent evaluation planning process. The modules cover developing your program's logic model, evaluation questions, evaluation design, sampling, data quality, data collection, data analysis, and dissemination.

Evaluation Planning

A priority in developing the RTA evaluation was preparing state education agency (SEA) staff to plan and carry out the evaluation independently in future years. To support staff in designing and implementing a program evaluation, R12CC used the REL Central Program Evaluation Toolkit. The toolkit provides step-by-step guidance and promotes a shared language of evaluation among SEA staff. This shared language mattered because staff members brought varying levels of knowledge and experience with program evaluation.

Step 1: Problem Statement

Our first step was to create an internal timeline, based on the toolkit's modules, to set a cadence for team planning and to track progress toward a comprehensive evaluation plan. Team members then reviewed the toolkit modules on their own and met biweekly to work through each piece together, building Kansas' RTA evaluation plan step by step, beginning with a problem statement.

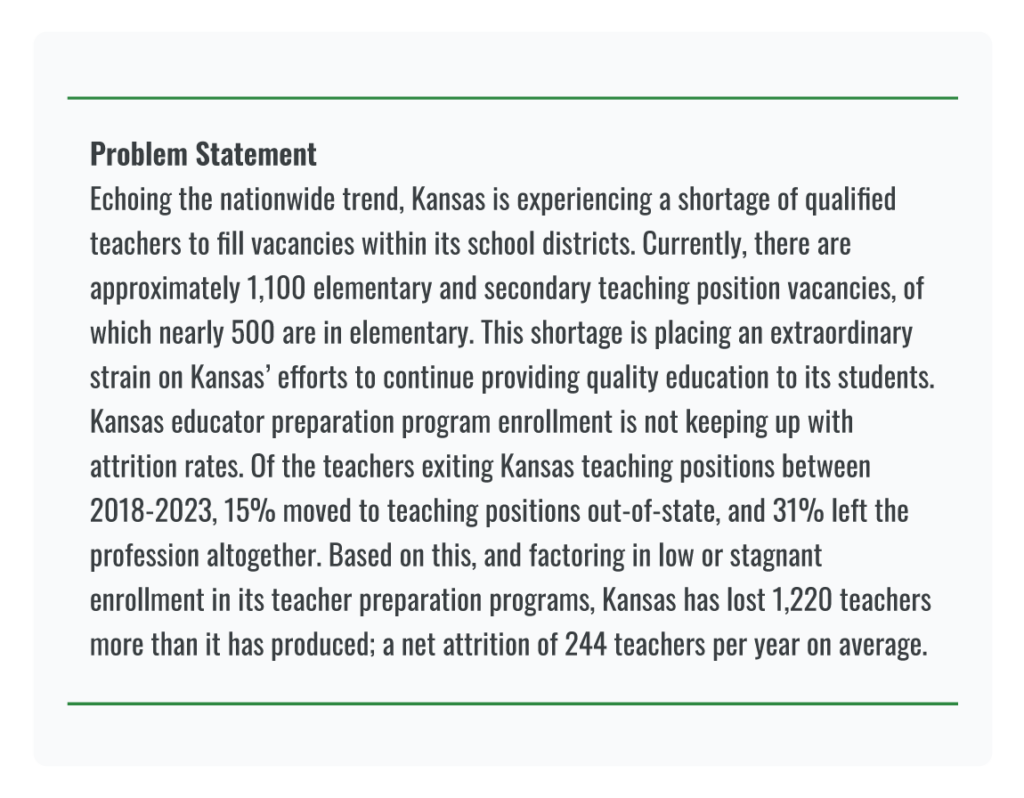

The problem statement provides a clear, succinct explanation of the challenges the program is meant to address. Using state-level data, we described teaching vacancies, teacher attrition, and enrollment in teacher preparation programs to illustrate why a new model of teacher preparation is needed. Once the problem was defined, we moved on to developing the logic model.

Step 2: Logic Model

A program logic model is a graphic organizer that shows how an initiative is intended to work and why it is a sound way to produce the desired change. Development begins by defining the long-term outcomes, or the desired overall change. The remaining components then show the relationships among program resources, activities, the outputs that will be produced, and the short- and medium-term changes expected as a result of implementation.

In the Kansas RTA logic model, it was important to show how various resources (e.g., funding mechanisms) connect to activities. Illustrating the connections between resources/inputs and outputs makes clear that RTA draws on different sources to support different program components. For example, the state has several teacher scholarship programs that feed into RTA, and it is important to recognize that without them, RTA could not operate. Similarly, the logic model details the resources contributed by partner organizations, such as the statewide teachers union, and connects them to specific activities and outputs.

An important piece of a logic model is its description of the intended results, or outcomes, the work aims to achieve. The intended outcomes reflect program goals and become the indicators of success that the evaluation will measure. For our purposes, we described outcomes in terms of what we expect to achieve within one year (short-term), within three years (medium-term), and over the long term (four or more years of implementation). When crafting outcomes, we used language from HB 2292 to ensure that evaluation reporting aligns with legislative mandates, and we looked to similar state-level teacher preparation programs to set targets (e.g., apprentice retention that exceeds the state average for other new teachers).

Step 3: Evaluation Questions and Design

With the program described in the logic model, we turned to the questions the state wanted to answer about implementation and outcomes. Because this is a pilot apprenticeship program and the evaluation is intended to support continuous improvement and scaling, we determined that the evaluation design should be descriptive rather than experimental (i.e., randomly assigning participants to the program) or correlational (e.g., examining whether variables such as prior experience are related to program retention). To build a comprehensive list of evaluation questions, we first reviewed the toolkit's sample process and outcome questions, and then held several brainstorming sessions, using the logic model to determine which evaluation questions needed to be asked.

Step 4: Evaluation Samples, Data Quality, Data Collection, and Data Analysis

The next step was determining whom to engage in the evaluation, what data already existed, and what data would need to be collected. For each evaluation question, we developed a matrix detailing whom to collect data from, how to collect the data, and how to analyze the data to answer the question. We also set a timeline for when each evaluation question could be answered. For example, questions about long-term outcomes cannot be answered before year 4 of the program.

Step 5: Dissemination

Although dissemination is the last module in the toolkit, we began evaluation planning by discussing the various report audiences and the overall purpose of the evaluation. Carefully defining who needs to be informed of evaluation results, and for what purpose, is key to choosing the right level of detail and format for sharing information. For example, information provided to funders will look different from program results shared with potential participants as a recruitment tool. As we complete collaborative evaluation planning, we intend to develop a comprehensive approach for sharing the evaluation results.

Summary

Using REL Central's Program Evaluation Toolkit gave R12CC a framework for technical assistance aimed at building human and resource capacity. The RTA evaluation will document implementation of the pilot program, provide a mechanism for gathering data for continuous improvement and scaling, and support the study of program outcomes. Completing the evaluation planning process collaboratively ensures that the Kansas State Department of Education has the necessary data collection mechanisms in place and yields insights that can inform its decisions about scaling. The evaluation will identify areas for improvement, support the adaptation of program strategies, and enhance the overall impact of the program.

References

[1] Stewart, J., Joyce, J., Haines, M., Yanoski, D., Gagnon, D., Luke, K., Rhoads, C., & Germeroth, C. (2021). Program evaluation toolkit: Quick start guide (REL 2022-112). U.S. Department of Education, National Center for Education Evaluation and Regional Assistance, Regional Educational Laboratory Central. https://ies.ed.gov/ncee/edlabs/regions/central/pdf/REL_2021112.pdf

Katie Allen, Ed.D., is the Co-Director of the Region 12 Comprehensive Center and Co-Lead for Kansas. She helps coordinate the Center's work and supports technical assistance to state education agencies on key education opportunities.